#include <lpboost.h>

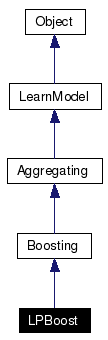

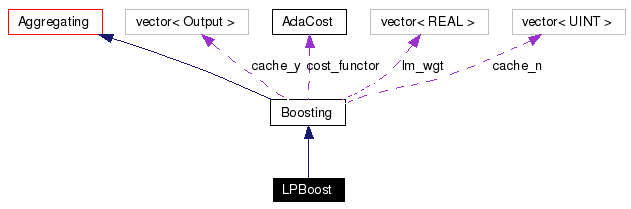

Inheritance diagram for LPBoost:

Public Member Functions

| Return type | Member | Description |
| --- | --- | --- |
| | `LPBoost ()` | |
| | `LPBoost (const Boosting &s)` | |
| | `LPBoost (std::istream &is)` | |
| `virtual const id_t &` | `id () const` | |
| `virtual LPBoost *` | `create () const` | Create a new object using the default constructor. |
| `virtual LPBoost *` | `clone () const` | Create a new object by replicating itself. |
| `virtual bool` | `set_aggregation_size (UINT)` | |
| `virtual void` | `train ()` | Train with preset data set and sample weight. |
| `REAL` | `C () const` | The regularization constant C. |
| `void` | `set_C (REAL _C)` | Set the regularization constant C. |

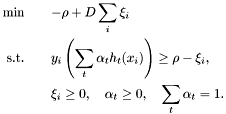

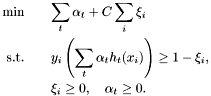

With a similar idea to the original LPBoost [1], which solves

we instead implement the algorithm to solve

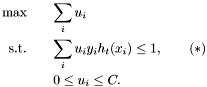

by column generation. Note that the dual problem is

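Column generation corresponds to generating the constraints (*) one at a time: each new hypothesis $h_t$ adds a primal column $\alpha_t$ and a dual constraint. We actually use an individual upper bound $C_i$ on each $u_i$, proportional to the example's initial weight, instead of the common bound $C$.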
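To see where the dual and its bounds come from, here is a short sketch of the standard Lagrangian derivation, assuming the primal objective with individual bounds is written as $\sum_t \alpha_t + \sum_i C_i \xi_i$. The multipliers $\mu_i$ and $\nu_t$ are introduced only for this derivation. With nonnegative multipliers $u_i, \mu_i, \nu_t$,

$$
\mathcal{L} = \sum_t \alpha_t + \sum_i C_i \xi_i
- \sum_i u_i \Big( y_i \sum_t \alpha_t h_t(x_i) - 1 + \xi_i \Big)
- \sum_i \mu_i \xi_i - \sum_t \nu_t \alpha_t .
$$

Setting the derivatives with respect to the primal variables to zero gives

$$
\begin{aligned}
\frac{\partial \mathcal{L}}{\partial \alpha_t} &= 1 - \sum_i u_i y_i h_t(x_i) - \nu_t = 0
&&\Longrightarrow\quad \sum_i u_i y_i h_t(x_i) \le 1, \\
\frac{\partial \mathcal{L}}{\partial \xi_i} &= C_i - u_i - \mu_i = 0
&&\Longrightarrow\quad 0 \le u_i \le C_i,
\end{aligned}
$$

and what remains of $\mathcal{L}$ is $\sum_i u_i$, the dual objective. With $C_i = C$ for all $i$ this is exactly the dual problem with constraints (*). Complementary slackness, $\nu_t \alpha_t = 0$, also shows that $\alpha_t > 0$ only for hypotheses whose constraint (*) is tight.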

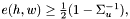

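If we treat $w_i = u_i / \Sigma_u$, the normalized version of $u_i$, as the sample weight, and $\Sigma_u = \sum_i u_i$ as the normalization constant, then for hypotheses with outputs in $\{-1, +1\}$ we have $\sum_i w_i y_i h_t(x_i) = 1 - 2 e(h_t, w)$, where $e(h_t, w) = \sum_{i:\, h_t(x_i) \ne y_i} w_i$ is the weighted training error. Hence (*) is the same as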

$$ \Sigma_u \big(1 - 2 e(h_t, w)\big) \le 1, $$

which means

$$ e(h_t, w) \ge \frac12 \big(1 - \Sigma_u^{-1}\big). \qquad (**) $$
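The equivalence of (*) and (**) can be checked numerically. Below is a minimal C++ sketch, assuming labels and hypothesis outputs are stored as `int` vectors with values in {-1, +1}; `DualCheck` and `check_dual_constraint` are hypothetical names used only for illustration, not members of the LPBoost class. It evaluates both forms of the constraint for the same dual vector $u$ so they can be compared.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical result type: both forms of the dual constraint for one h.
struct DualCheck {
    double sigma_u;    // Sigma_u = sum_i u_i
    double error;      // e(h, w), with w_i = u_i / Sigma_u
    bool constraint;   // (*):  sum_i u_i y_i h(x_i) <= 1
    bool error_bound;  // (**): e(h, w) >= (1 - 1 / Sigma_u) / 2
};

// Labels y and predictions h are assumed to be in {-1, +1}.
DualCheck check_dual_constraint(const std::vector<double>& u,
                                const std::vector<int>& y,
                                const std::vector<int>& h) {
    assert(u.size() == y.size() && y.size() == h.size());
    DualCheck r{0.0, 0.0, false, false};
    double edge = 0.0;  // sum_i u_i y_i h(x_i)
    for (std::size_t i = 0; i < u.size(); ++i) {
        r.sigma_u += u[i];
        edge += u[i] * y[i] * h[i];
        if (h[i] != y[i]) r.error += u[i];  // unnormalized error mass
    }
    assert(r.sigma_u > 0.0);
    r.error /= r.sigma_u;  // normalize to e(h, w)
    r.constraint = edge <= 1.0;
    r.error_bound = r.error >= 0.5 * (1.0 - 1.0 / r.sigma_u);
    return r;
}
```

For example, with $u = (0.5, 0.5, 0.5, 0.5)$ we get $\Sigma_u = 2$, so the threshold in (**) is 0.25: a hypothesis that reproduces $y$ exactly violates both tests, while one with weighted error 0.5 satisfies both.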

Assume that we have found $h_1, \dots, h_T$ so far; solving the dual problem with these $T$ constraints (*) gives us $u^{(T)}$. If every remaining $h$ in $\mathcal{H}$ satisfies $\sum_i u_i^{(T)} y_i h(x_i) \le 1$, the duality condition tells us that even if we set $\alpha_h = 0$ for those remaining $h$, the solution is still optimal. Assuming the weak learner returns the hypothesis with the smallest weighted error under $w^{(T)}$, if even that best hypothesis satisfies (**), then every hypothesis in $\mathcal{H}$ does. Thus, we can train the weak learner with sample weight $w^{(T)}$ in each iteration, and terminate once the best hypothesis satisfies (**).
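One iteration of this termination test can be sketched as follows. This is a minimal C++ illustration under stated assumptions, not the code of `LPBoost::train()`: the dual vector $u^{(T)}$ is taken as an input (assumed to come from an LP solver, which is not shown), and the weak learner is replaced by a hypothetical exhaustive search over a finite pool of hypotheses, each given by its {-1, +1} predictions on the training set. `StepResult`, `weighted_error`, and `column_generation_step` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Weighted error e(h, w): total weight of the examples that h misclassifies.
double weighted_error(const std::vector<double>& w, const std::vector<int>& y,
                      const std::vector<int>& h) {
    double e = 0.0;
    for (std::size_t i = 0; i < w.size(); ++i)
        if (h[i] != y[i]) e += w[i];
    return e;
}

struct StepResult {
    std::size_t best;  // index of the lowest-error hypothesis in the pool
    bool terminate;    // true if even the best hypothesis satisfies (**)
};

// Hypothetical stand-in for one column-generation step. The "weak learner"
// here simply scans a finite pool for the smallest weighted error.
StepResult column_generation_step(const std::vector<double>& u,
                                  const std::vector<int>& y,
                                  const std::vector<std::vector<int>>& pool) {
    assert(!pool.empty());
    double sigma_u = 0.0;
    for (double ui : u) sigma_u += ui;
    assert(sigma_u > 0.0);

    // Sample weight w^(T): the normalized dual vector.
    std::vector<double> w(u.size());
    for (std::size_t i = 0; i < u.size(); ++i) w[i] = u[i] / sigma_u;

    StepResult r{0, false};
    double best_err = weighted_error(w, y, pool[0]);
    for (std::size_t k = 1; k < pool.size(); ++k) {
        double e = weighted_error(w, y, pool[k]);
        if (e < best_err) { best_err = e; r.best = k; }
    }
    // If the best hypothesis satisfies (**), every hypothesis in the pool
    // satisfies (*), so no new column can improve the current solution.
    r.terminate = best_err >= 0.5 * (1.0 - 1.0 / sigma_u);
    return r;
}
```

With $u = (0.5, 0.5, 0.5, 0.5)$ the threshold is 0.25. A pool that contains a perfect classifier yields a violated constraint (no termination; that hypothesis would be added as the next column), whereas a pool whose best weighted error is 0.5 triggers termination.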

[1] A. Demiriz, K. P. Bennett, and J. Shawe-Taylor. Linear programming boosting via column generation. Machine Learning, 46(1-3):225-254, 2002.

Definition at line 59 of file lpboost.h.

`LPBoost::LPBoost ()`

Definition at line 63 of file lpboost.h. References LPBoost::set_C(). Referenced by LPBoost::clone() and LPBoost::create().

`LPBoost::LPBoost (const Boosting &s)`

Definition at line 64 of file lpboost.h. References Boosting::convex and LPBoost::set_C().

`LPBoost::LPBoost (std::istream &is)`

`REAL LPBoost::C () const`

The regularization constant C.

`virtual LPBoost * LPBoost::clone () const`

Create a new object by replicating itself. The code for a derived class `Derived` is always `return new Derived(*this);`.

Reimplemented from Boosting. Definition at line 69 of file lpboost.h. References LPBoost::LPBoost().

`virtual LPBoost * LPBoost::create () const`

Create a new object using the default constructor. The code for a derived class `Derived` is always `return new Derived();`.

Reimplemented from Boosting. Definition at line 68 of file lpboost.h. References LPBoost::LPBoost().
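The `create()`/`clone()` pair follows the common C++ "virtual constructor" idiom. The following minimal, self-contained sketch uses hypothetical classes `Model` and `MyModel` (not the actual Boosting hierarchy) to show how covariant return types let an override return the derived pointer type, and how a caller holding only a base reference can still copy or freshly construct the dynamic type. The positivity check on the constructor argument is a precondition invented for this sketch.

```cpp
#include <cassert>
#include <memory>

// Hypothetical base class standing in for a learning-model hierarchy.
class Model {
public:
    virtual ~Model() = default;
    virtual Model* create() const = 0;  // fresh, default-constructed object
    virtual Model* clone() const = 0;   // copy of *this
    virtual double c() const = 0;
};

class MyModel : public Model {
public:
    MyModel() : c_(1.0) {}
    // Hypothetical precondition for this sketch: the parameter is positive.
    explicit MyModel(double value) : c_(value) { assert(value > 0.0); }

    // Covariant return types: overrides may return MyModel* instead of Model*.
    MyModel* create() const override { return new MyModel(); }
    MyModel* clone() const override { return new MyModel(*this); }
    double c() const override { return c_; }

private:
    double c_;  // hypothetical parameter, to show what clone() preserves
};

// Callers holding only a Model& can still copy the dynamic type,
// taking ownership of the raw pointer returned by clone().
std::unique_ptr<Model> duplicate(const Model& m) {
    return std::unique_ptr<Model>(m.clone());
}
```

Here `create()` discards the state of the original (the copy gets the default parameter), while `clone()` preserves it; both return a new heap object that the caller must own.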

`virtual const id_t & LPBoost::id () const`

Reimplemented from Boosting.

`virtual bool LPBoost::set_aggregation_size (UINT)`

Reimplemented from Aggregating.

`void LPBoost::set_C (REAL _C)`

Set the regularization constant C.

Definition at line 78 of file lpboost.h. Referenced by LPBoost::LPBoost().

`virtual void LPBoost::train ()`

Train with preset data set and sample weight.

Reimplemented from Boosting. Definition at line 66 of file lpboost.cpp. References Boosting::grad_desc_view, Aggregating::lm_base, LearnModel::n_samples, LearnModel::ptd, LearnModel::ptw, LearnModel::set_dimensions(), and U.

1.4.6
