#include <cgboost.h>

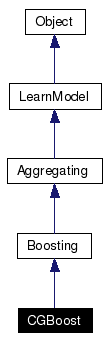

Inheritance diagram for CGBoost:

Public Member Functions

| Type | Member | Description |
|---|---|---|
| | CGBoost (bool cvx=false, const cost::Cost &c=cost::_cost) | |
| | CGBoost (const Boosting &s) | |
| | CGBoost (std::istream &is) | |
| virtual const id_t & | id () const | |
| virtual CGBoost * | create () const | Create a new object using the default constructor. |
| virtual CGBoost * | clone () const | Create a new object by replicating itself. |
| virtual bool | set_aggregation_size (UINT) | Specify the number of hypotheses used in aggregating. |
| virtual void | train () | Train with preset data set and sample weight. |
| virtual void | reset () | |

Protected Member Functions

| Type | Member | Description |
|---|---|---|
| virtual void | train_gd () | Training using gradient descent. |
| virtual REAL | linear_weight (const DataWgt &, const LearnModel &) | |
| virtual void | linear_smpwgt (DataWgt &) | |
| virtual bool | serialize (std::ostream &, ver_list &) const | |
| virtual bool | unserialize (std::istream &, ver_list &, const id_t &=NIL_ID) | |

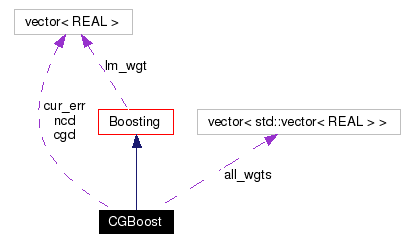

Protected Attributes

| Type | Member | Description |
|---|---|---|
| std::vector< REAL > | ncd | |
| std::vector< REAL > | cgd | |
| std::vector< REAL > | cur_err | data only valid within training (remove?) |

Friends

| Type | Member |
|---|---|
| struct | _boost_cg |

Detailed Description

This class provides two ways to implement the conjugate gradient idea in the Boosting framework.

The first way is to manipulate the sample weight.

The other way is to adjust the projected search direction f. The adjusted direction is also a linear combination of weak learners. We prefer this way, which is selected with use_gradient_descent(true).
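A minimal sketch of the second way, under stated assumptions: every function is represented by its vector of outputs on the n training samples, and the classic nonlinear conjugate-gradient rule d_t = f_t + beta * d_{t-1} adjusts the projected direction f_t (the new weak learner) using the previous adjusted direction d_{t-1}. The Fletcher-Reeves choice beta = |g_t|^2 / |g_{t-1}|^2 (g is the cost gradient on the samples) and the helper names dot and conjugate_direction are illustrative assumptions, not CGBoost's actual formula or code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

using Vec = std::vector<double>;  // one value per training sample

// Inner product of two sample-space vectors of equal length.
double dot(const Vec& a, const Vec& b) {
    assert(a.size() == b.size());
    double s = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) s += a[i] * b[i];
    return s;
}

// Hypothetical helper: turn the projected direction f (the new weak
// learner's outputs) into d_t = f_t + beta * d_{t-1}, using the assumed
// Fletcher-Reeves ratio beta = |g_t|^2 / |g_{t-1}|^2 of cost gradients.
// Without a previous direction it returns f unchanged (plain descent).
Vec conjugate_direction(const Vec& f, const Vec& grad,
                        const Vec& prev_grad, const Vec& prev_dir) {
    if (prev_dir.empty()) return f;
    assert(f.size() == prev_dir.size());
    const double prev_norm2 = dot(prev_grad, prev_grad);
    const double beta = prev_norm2 > 0.0 ? dot(grad, grad) / prev_norm2 : 0.0;
    Vec dir(f.size());
    for (std::size_t i = 0; i < f.size(); ++i)
        dir[i] = f[i] + beta * prev_dir[i];
    return dir;
}
```

Because d_{t-1} is itself a combination of earlier weak learners, the result remains a linear combination of weak learners, which is the property the description relies on.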

Differences between AdaBoost and CGBoost (gradient descent view):

Definition at line 36 of file cgboost.h.

Constructor & Destructor Documentation

CGBoost::CGBoost (bool cvx=false, const cost::Cost &c=cost::_cost)

Definition at line 46 of file cgboost.h. Referenced by CGBoost::clone() and CGBoost::create().

CGBoost::CGBoost (const Boosting &s)

Definition at line 48 of file cgboost.h. References Boosting::lm_wgt.

CGBoost::CGBoost (std::istream &is)

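Member Function Documentation

virtual CGBoost * CGBoost::clone () const

Create a new object by replicating itself. The code for a derived class Derived is always return new Derived(*this);

Reimplemented from Boosting. Definition at line 57 of file cgboost.h. References CGBoost::CGBoost().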

virtual CGBoost * CGBoost::create () const

Create a new object using the default constructor. The code for a derived class Derived is always return new Derived();

Reimplemented from Boosting. Definition at line 56 of file cgboost.h. References CGBoost::CGBoost().
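create() and clone() follow the usual C++ "virtual constructor" idiom: a caller holding only a base-class reference can obtain a fresh or copied object of the dynamic type, and covariant return types let an override return a pointer to the derived class. The sketch below uses hypothetical classes Model and Derived and a hypothetical duplicate helper; it illustrates the pattern and is not LEMGA's class hierarchy.

```cpp
#include <cassert>
#include <memory>

// Hypothetical base class standing in for a polymorphic model hierarchy.
struct Model {
    virtual ~Model() = default;
    virtual Model* create() const = 0;  // fresh, default-constructed object
    virtual Model* clone() const = 0;   // copy of *this
    virtual int kind() const = 0;
};

struct Derived : Model {
    int value = 0;
    Derived() = default;
    explicit Derived(int v) : value(v) {}
    // Covariant return types: Derived* may override Model*.
    Derived* create() const override { return new Derived(); }
    Derived* clone() const override { return new Derived(*this); }
    int kind() const override { return 1; }
};

// The caller owns the returned raw pointer; wrap it at once to avoid leaks.
std::unique_ptr<Model> duplicate(const Model& m) {
    std::unique_ptr<Model> copy(m.clone());
    assert(copy != nullptr);
    return copy;
}
```

Calling create() on a Derived seen through a const Model & yields a default-constructed Derived, while clone() yields a Derived carrying the same value.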

virtual const id_t & CGBoost::id () const

Reimplemented from Boosting.

virtual void CGBoost::linear_smpwgt (DataWgt &)

ncd is actually

It can be iteratively computed as

Reimplemented from Boosting. Definition at line 141 of file cgboost.cpp. References CGBoost::cur_err, EPSILON, Boosting::get_output(), Boosting::lm_wgt, Aggregating::n_in_agg, LearnModel::n_samples, CGBoost::ncd, LearnModel::ptd, and LearnModel::ptw.

virtual REAL CGBoost::linear_weight (const DataWgt &, const LearnModel &)

Reimplemented from Boosting. Definition at line 108 of file cgboost.cpp. References LearnModel::c_error(), CGBoost::cur_err, LearnModel::exact_dimensions(), LearnModel::get_output(), LearnModel::n_samples, CGBoost::ncd, LearnModel::ptd, and LearnModel::train_data().

virtual void CGBoost::reset ()

Delete learning models stored in lm. This is only used in operator= and load().

Reimplemented from Boosting. Definition at line 21 of file cgboost.cpp. References Boosting::reset().

virtual bool CGBoost::serialize (std::ostream &, ver_list &) const

Reimplemented from Boosting. Definition at line 38 of file cgboost.cpp. References Boosting::grad_desc_view, SERIALIZE_PARENT, and Aggregating::size().

virtual bool CGBoost::set_aggregation_size (UINT)

Specify the number of hypotheses used in aggregating.

Reimplemented from Aggregating. Definition at line 26 of file cgboost.cpp. References Boosting::clear_cache(), Boosting::grad_desc_view, Boosting::lm_wgt, Aggregating::set_aggregation_size(), and Aggregating::size().
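An aggregating model outputs a weighted vote of its stored hypotheses, and specifying the aggregation size limits that vote to the first n of them. The hypothetical Ensemble class below sketches the idea; its names, the rule that rejects n > size(), and the choice that add() resets the aggregation size are assumptions of this sketch, not the documented behavior of Aggregating or CGBoost.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical weighted ensemble: output(x) = sum_{t < n_in_agg} w_t * h_t(x).
class Ensemble {
public:
    using Hypothesis = std::function<double(double)>;

    // Store a hypothesis with its weight; by assumption, adding one
    // resets the aggregation size to use every stored hypothesis.
    void add(Hypothesis h, double w) {
        hyps_.push_back(std::move(h));
        wgts_.push_back(w);
        n_in_agg_ = hyps_.size();
    }

    // Use only the first n hypotheses; rejecting n > size() is an
    // assumption of this sketch.
    bool set_aggregation_size(std::size_t n) {
        if (n > hyps_.size()) return false;
        n_in_agg_ = n;
        return true;
    }

    std::size_t size() const { return hyps_.size(); }

    double output(double x) const {
        assert(n_in_agg_ <= hyps_.size());
        double sum = 0.0;
        for (std::size_t t = 0; t < n_in_agg_; ++t) sum += wgts_[t] * hyps_[t](x);
        return sum;
    }

private:
    std::vector<Hypothesis> hyps_;
    std::vector<double> wgts_;
    std::size_t n_in_agg_ = 0;
};
```

With two stumps weighted 0.5 and 0.25, the full vote at x = 0.5 is 0.5 - 0.25 = 0.25; after set_aggregation_size(1) only the first stump counts and the output is 0.5.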

virtual void CGBoost::train ()

Train with preset data set and sample weight.

Reimplemented from Boosting. Definition at line 83 of file cgboost.cpp. References CGBoost::cgd, CGBoost::cur_err, Boosting::grad_desc_view, LearnModel::n_samples, CGBoost::ncd, LearnModel::ptw, and Boosting::train().

virtual void CGBoost::train_gd ()

Training using gradient descent.

Reimplemented from Boosting. Definition at line 97 of file cgboost.cpp. References Boosting::convex and lemga::iterative_optimize().
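train_gd delegates to lemga::iterative_optimize(). The general shape of such a gradient-descent driver can be sketched as a loop that asks a search object for a direction, a step length along it, and an update, until a stopping test passes. The driver name iterative_optimize_sketch, the search-object interface (initialize, satisfied, direction, step_length, update), and the one-dimensional quadratic cost are hypothetical; they do not reproduce the signature of lemga::iterative_optimize.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical driver: repeat direction -> step length -> update until
// the search object reports that it is satisfied.
template <class Search>
void iterative_optimize_sketch(Search& s) {
    s.initialize();
    while (!s.satisfied()) {
        const double dir = s.direction();
        const double step = s.step_length(dir);
        s.update(dir * step);
    }
}

// Plain gradient descent on the cost c(w) = (w - 3)^2 with a fixed
// learning rate; stops after max_iter steps or at a tiny gradient.
struct QuadraticGD {
    double w = 0.0;
    double rate = 0.25;
    int iter = 0;
    int max_iter = 100;

    double gradient() const { return 2.0 * (w - 3.0); }
    void initialize() { w = 0.0; iter = 0; }
    bool satisfied() const {
        return iter >= max_iter || std::fabs(gradient()) < 1e-9;
    }
    double direction() const { return -gradient(); }  // steepest descent
    double step_length(double) const {
        assert(rate > 0.0);
        return rate;
    }
    void update(double delta) { w += delta; ++iter; }
};
```

In a boosting setting the direction would be a weak learner (or, for CGBoost, the adjusted conjugate direction) and the step length its weight; the scalar cost here only keeps the loop easy to check.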

virtual bool CGBoost::unserialize (std::istream &, ver_list &, const id_t &=NIL_ID)

Reimplemented from Boosting. Definition at line 56 of file cgboost.cpp. References Boosting::lm_wgt, Object::NIL_ID, Aggregating::size(), UNSERIALIZE_PARENT, and Boosting::use_gradient_descent().
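serialize and unserialize reference SERIALIZE_PARENT and UNSERIALIZE_PARENT, which suggests a parent-first layout: each class writes its base-class state before its own. The sketch below assumes that layout plus one format version per class level. The Parent and Child classes, the VerList alias, and the text format are hypothetical, and how LEMGA actually fills ver_list is not documented here.

```cpp
#include <cassert>
#include <cstddef>
#include <istream>
#include <ostream>
#include <sstream>
#include <vector>

// Hypothetical stand-in for a version list: one format version per class level.
using VerList = std::vector<int>;

struct Parent {
    double rate = 0.0;
    virtual ~Parent() = default;

    // Write "version data" for this level and record the version used.
    virtual bool serialize(std::ostream& os, VerList& vl) const {
        vl.push_back(1);
        os << 1 << ' ' << rate << '\n';
        return static_cast<bool>(os);
    }
    virtual bool unserialize(std::istream& is, VerList& vl) {
        int ver = 0;
        if (!(is >> ver >> rate) || ver != 1) return false;
        vl.push_back(ver);
        return true;
    }
};

struct Child : Parent {
    std::vector<double> wgts;

    // The parent's state goes first, then this level's own data.
    bool serialize(std::ostream& os, VerList& vl) const override {
        if (!Parent::serialize(os, vl)) return false;
        vl.push_back(2);
        os << 2 << ' ' << wgts.size();
        for (double w : wgts) os << ' ' << w;
        os << '\n';
        return static_cast<bool>(os);
    }
    bool unserialize(std::istream& is, VerList& vl) override {
        if (!Parent::unserialize(is, vl)) return false;
        int ver = 0;
        std::size_t n = 0;
        if (!(is >> ver >> n) || ver != 2) return false;
        vl.push_back(ver);
        wgts.assign(n, 0.0);
        for (double& w : wgts)
            if (!(is >> w)) return false;
        return true;
    }
};
```

Reading mirrors writing level by level, so a reader that meets an unexpected version at any level can stop before misinterpreting the remaining data.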

Friends And Related Function Documentation

friend struct _boost_cg

Member Data Documentation

std::vector< REAL > CGBoost::cgd

Definition at line 42 of file cgboost.h. Referenced by CGBoost::train().

std::vector< REAL > CGBoost::cur_err

data only valid within training (remove?)

Definition at line 65 of file cgboost.h. Referenced by CGBoost::linear_smpwgt(), CGBoost::linear_weight(), and CGBoost::train().

std::vector< REAL > CGBoost::ncd

Definition at line 42 of file cgboost.h. Referenced by CGBoost::linear_smpwgt(), CGBoost::linear_weight(), and CGBoost::train().

Generated by Doxygen 1.4.6
